This is the fifth article in a series of articles about the Batch API in Drupal. The Batch API is a system in Drupal that allows data to be processed in small chunks in order to prevent timeout errors or memory problems.

So far in this series we have looked at creating a batch process using a form, creating a batch class so that batches can be run through Drush, using the finished state to control batch processing, and processing CSV files through a batch process. Together, these articles give a good grounding in how to use the Drupal Batch API.

In this article we will take a closer look at how the batch system processes items by creating a batch run inside an already running batch process. This shows how the batch system runs and what happens when you try to add operations to a running batch.

Let's set up the initial batch operation.

Setting Up The Batch

The setup for this batch process is similar to the batch processes in the other articles. This will kick off a batch process that processes 1,000 items in chunks of 100.

```php
use Drupal\Core\Batch\BatchBuilder;

$batch = new BatchBuilder();
$batch->setTitle('Running batch process.')
  ->setFinishCallback([BatchClass::class, 'batchFinished'])
  ->setInitMessage('Commencing')
  ->setProgressMessage('Processing...')
  ->setErrorMessage('An error occurred during processing.');

// Create 10 chunks of 100 items.
$chunks = array_chunk(range(1, 1000), 100);

// Process each chunk in the array.
foreach ($chunks as $id => $chunk) {
  $args = [
    $id,
    $chunk,
    // The $addBatch flag: each original operation will start a new batch.
    TRUE,
  ];
  $batch->addOperation([BatchClass::class, 'batchProcess'], $args);
}

batch_set($batch->toArray());
```

The key difference here is that we also pass a flag in the arguments that causes each of the original batch operations to initiate a new batch process whilst the batch is running.

With that setup we can look at the batch operations.

Adding To The Batch Operations

The parameters we set up for the batchProcess() method have an additional $addBatch flag that is set to TRUE for all of our initial batch operations.

During the normal run of the batchProcess() method we check this flag and, if it is TRUE, initiate a new batch process using the BatchBuilder class. The important detail is that the inner operations are given a FALSE flag so that they don't initiate additional batch runs of their own (which would cause an infinite loop).

The rest of the batch operation code is the same as in previous articles: we just roll a dice to decide on the outcome of the operation in order to simulate issues.

When we call batch_set(), Drupal calls batch_get() to see if a batch operation is already running. If it is, _batch_append_set() is called, which appends the new batch run directly after the one that is running. This means that we can set up and initiate a new batch process during the run of the current process without interrupting or disrupting the current operations. The new operations will be run at the end of the current process.
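The ordering described above can be modelled outside Drupal to see why the numbers later in this article come out the way they do. The following is a toy simulation under stated assumptions, not Drupal's batch code: `ToyBatch`, `makeOperation()` and `runDemo()` are hypothetical names, and the model simply appends every new set to the end of the set list. Core's exact placement of several appended sets relative to each other may differ; the model only captures that the running set finishes before any appended set starts.

```php
<?php

/**
 * Toy model of a running batch, NOT Drupal's implementation. A set added with
 * set() while run() is working is appended to the end of the set list, so the
 * current set always finishes before the new one starts.
 */
final class ToyBatch
{
    /** @var array<int, array{operations: array, finished: ?callable}> */
    private array $sets = [];
    /** @var array<int, array{0: int, 1: int}> [operation id, item] pairs. */
    public array $processed = [];
    /** @var string[] Messages produced by finish callbacks. */
    public array $messages = [];

    /** Hypothetical stand-in for batch_set(). */
    public function set(array $operations, ?callable $finished): void
    {
        $this->sets[] = ['operations' => $operations, 'finished' => $finished];
    }

    public function run(): void
    {
        // count() is re-evaluated on every pass, so sets appended by running
        // operations are picked up once the earlier sets are done.
        for ($i = 0; $i < count($this->sets); $i++) {
            foreach ($this->sets[$i]['operations'] as $operation) {
                $operation($this);
            }
            if ($this->sets[$i]['finished'] !== null) {
                ($this->sets[$i]['finished'])($this);
            }
        }
    }
}

/** Mirrors the arguments of batchProcess(): id, chunk and the $addBatch flag. */
function makeOperation(int $id, array $chunk, bool $addBatch, bool $innerFinish): Closure
{
    return function (ToyBatch $batch) use ($id, $chunk, $addBatch, $innerFinish): void {
        if ($addBatch) {
            $finished = null;
            if ($innerFinish) {
                $finished = function (ToyBatch $b): void {
                    $b->messages[] = 'inner set finished';
                };
            }
            // Queue one extra chunk of 100 items. The inner operation gets
            // $addBatch = false, otherwise it would keep adding sets forever.
            $batch->set([makeOperation($id + 1000, range(1, 100), false, $innerFinish)], $finished);
        }
        foreach ($chunk as $item) {
            $batch->processed[] = [$id, $item];
        }
    };
}

function runDemo(bool $innerFinish): ToyBatch
{
    $batch = new ToyBatch();
    $operations = [];
    // 10 chunks of 100 items, each operation with $addBatch = true.
    foreach (array_chunk(range(1, 1000), 100) as $id => $chunk) {
        $operations[] = makeOperation($id, $chunk, true, $innerFinish);
    }
    $batch->set($operations, function (ToyBatch $b): void {
        $b->messages[] = 'original set finished';
    });
    $batch->run();
    return $batch;
}

$demo = runDemo(true);
// Prints "2000 items, 11 finished messages".
echo count($demo->processed), ' items, ', count($demo->messages), " finished messages\n";
```

In this model all 1,000 original items are processed before any appended item, and each appended set brings its own finish callback. Calling `runDemo(false)` models leaving the finish callback out of the inner sets, which leaves a single finished message.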

Here is the section of the batchProcess() method that creates the additional batch and calls batch_set(). As you can see, it uses exactly the same BatchBuilder code that we normally use to start batch processes, except that this time it is called within the processing method.

```php
public static function batchProcess(int $batchId, array $chunk, bool $addBatch, array &$context): void {
  // -- Set up batch sandbox.

  if ($addBatch === TRUE) {
    // For every "original" batch run we add another chunk of numbers to be
    // processed. This simulates generating an additional batch run inside an
    // existing batch.
    $batch = new BatchBuilder();
    $batch->setTitle('Running batch process.')
      ->setFinishCallback([BatchClass::class, 'batchFinished'])
      ->setInitMessage('Commencing')
      ->setProgressMessage('Processing...')
      ->setErrorMessage('An error occurred during processing.');

    // Add a new chunk to process. Sending the third argument as FALSE here
    // means we don't start another batch inside this one.
    $args = [
      $batchId + 1000,
      range(1, 100),
      FALSE,
    ];
    $batch->addOperation([BatchClass::class, 'batchProcess'], $args);

    // A batch is already running, so this appends a new batch run instead
    // of replacing the current one.
    batch_set($batch->toArray());
  }

  // --- Continue the batch processing.
}
```

After this batch operation has completed we will have processed 2,000 items: the original 1,000 items from the first batch, plus 100 items from each of the 10 additional sub-batch runs.

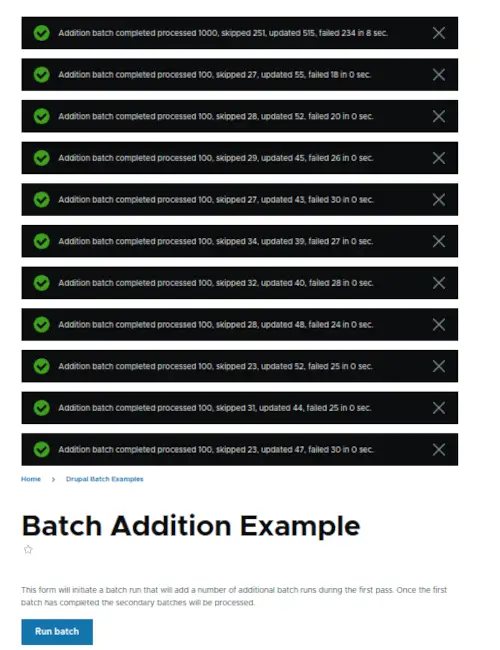

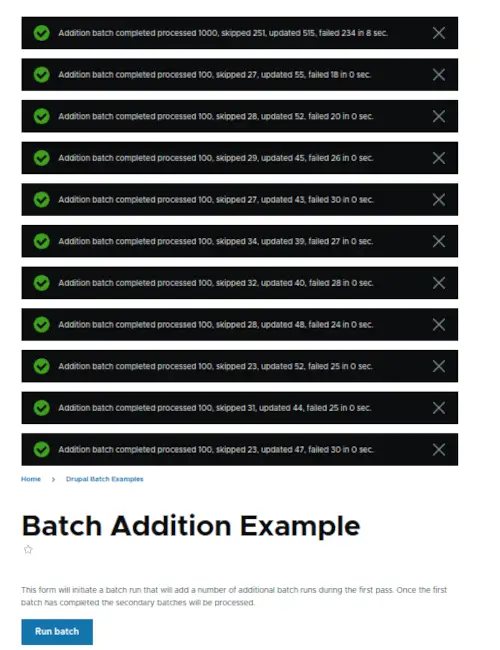

Because the additional batch runs are performed after the current run, their finish callbacks are also run separately. This has a strange side effect: 11 messages are printed once the operation has finished (1 for the initial batch run and another 10 for the additional batch runs), which makes the batch finished page look like this.

You can turn this off either by not printing a final total message or by not passing a finish callback to the inner batch setup. I have left this in the example to show clearly what is going on.

This appending behaviour is a useful part of the Batch API, as it means that a new batch will still be run even if Drupal is already running one.

How Is This Useful?

I'd imagine that some of you are reading this and thinking "why would you do this?". Well, this technique does seem somewhat esoteric, but it does have its uses. Adding batch operations during a run is very useful if you do not know what the "finished" state will look like, or if you are performing recursive operations. In those cases you can't preload the batch with all of the items up front, and the Batch API finished parameter isn't applicable either.

Any batch process that requires recursion can be set up in this way, with the initial seed being only the tip of the work that will be performed during the run. Because the operations are recursive, you don't know at the start of the batch process how many items there are to process until you have processed them.

For example, I was recently tasked with importing thousands of links from a sitemap index file. A sitemap.xml file lists the available links on a site in an XML document, but it can also be an index file that links to other sitemap.xml files, which in turn can also be index files.

The user would enter the location of the sitemap.xml index file, and this would trigger a batch process that added further batch processing for every sitemap.xml file listed in the index. Some of these sitemap.xml files were also index files, so we needed to kick off additional processing runs to process those files as well. All of the links in the sitemap.xml files were then saved to a database table for later analysis.
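The same "process one file, queue any newly discovered files" loop can be sketched without Drupal or the Sitemap Checker library. This is a minimal sketch under several assumptions: a plain work queue stands in for the batch runs added with batch_set(), `collectSitemapLinks()` is a hypothetical name, PHP's built-in DOM extension replaces the library's parsers, and the `$fetch` callable replaces the HTTP client so the example runs offline against made-up example.com files.

```php
<?php

/**
 * Collect page links from a sitemap that may be an index of other sitemaps.
 * Each file is one unit of work; index files queue more work as they are
 * found, much like calling batch_set() from inside a batch operation.
 *
 * @param callable(string): string $fetch Returns the XML for a URL.
 * @return string[] Page URLs in the order they were found.
 */
function collectSitemapLinks(string $rootUrl, callable $fetch): array
{
    $queue = [$rootUrl];
    $seen = [];
    $links = [];

    while ($queue !== []) {
        $url = array_shift($queue);
        // Skip files already processed, in case an index lists itself.
        if (isset($seen[$url])) {
            continue;
        }
        $seen[$url] = true;

        $doc = new DOMDocument();
        $doc->loadXML($fetch($url));
        $locs = [];
        foreach ($doc->getElementsByTagName('loc') as $loc) {
            $locs[] = trim($loc->textContent);
        }

        if ($doc->documentElement->localName === 'sitemapindex') {
            // Index file: each <loc> is another sitemap, so add more work.
            array_push($queue, ...$locs);
        } else {
            // Regular <urlset>: each <loc> is a page link to save.
            array_push($links, ...$locs);
        }
    }

    return $links;
}

// Hypothetical offline "site": URL => sitemap XML.
$ns = 'xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"';
$files = [
    'https://example.com/sitemap.xml' => "<sitemapindex $ns>"
        . '<sitemap><loc>https://example.com/pages.xml</loc></sitemap>'
        . '<sitemap><loc>https://example.com/blog-index.xml</loc></sitemap>'
        . '</sitemapindex>',
    'https://example.com/blog-index.xml' => "<sitemapindex $ns>"
        . '<sitemap><loc>https://example.com/blog-1.xml</loc></sitemap>'
        . '</sitemapindex>',
    'https://example.com/pages.xml' => "<urlset $ns>"
        . '<url><loc>https://example.com/</loc></url>'
        . '<url><loc>https://example.com/about</loc></url>'
        . '</urlset>',
    'https://example.com/blog-1.xml' => "<urlset $ns>"
        . '<url><loc>https://example.com/blog/post-1</loc></url>'
        . '</urlset>',
];

$links = collectSitemapLinks(
    'https://example.com/sitemap.xml',
    fn (string $url): string => $files[$url]
);
print_r($links);
```

The nested blog index is only discovered while the top-level index is being processed, which is exactly the situation where the total amount of work can't be known when the batch starts.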

Here is the important part of the batch process method that parses the sitemap.xml file and then either creates an additional batch operation or saves the links to the database. The library in use here is the Sitemap Checker PHP library, which I wrote before taking on this task. Using this library really sped up development since it had most of the sitemap parsing code built in. The "Link" entity here is a simple custom entity that stores information from the parsed URL.

```php
$client = \Drupal::service('http_client');
$sitemapSource = new SitemapXmlSource($client);
$sitemapData = $sitemapSource->fetch($sitemap);

if ($sitemapSource->isSitemapIndex()) {
  $sitemapIndexXmlParse = new SitemapIndexXmlParser();
  $sitemapList = $sitemapIndexXmlParse->parse($sitemapData);

  $batch = new BatchBuilder();
  $batch->setTitle('Importing processing of sitemaps')
    ->setFinishCallback([BatchLinkImport::class, 'batchFinished'])
    ->setInitMessage('Commencing')
    ->setProgressMessage('Completed @current')
    ->setErrorMessage('An error occurred during processing.');

  // Initiate a new batch to process each sitemap listed in this index.
  foreach ($sitemapList as $key => $sitemapUrl) {
    $args = [
      $key,
      $sitemapUrl->getRawUrl(),
    ];
    $batch->addOperation('\Drupal\link_audit\Batch\BatchLinkImport::processSitemapsBatch', $args);
  }

  batch_set($batch->toArray());
}
else {
  // A regular sitemap: save every link it contains.
  $sitemapParser = new SitemapXmlParser();
  $list = $sitemapParser->parse($sitemapData);

  foreach ($list as $url) {
    $context['results']['progress']++;
    $link = Link::create([
      'label' => $url->getPath(),
      'url' => $url->getRawUrl(),
      'path' => $url->getPath(),
      'location' => $sitemap,
    ]);
    $link->save();
  }
}
```

This process could be further improved by adding additional operations that write entries to the database instead of performing the write operation here.
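One way that improvement might look is to turn the links from a parsed sitemap into chunked operations instead of saving them all in one request. This is a sketch under assumptions: `buildSaveOperations()` and the `saveLinksBatch` callback are hypothetical names, and the chunk size of 50 is arbitrary. The function only builds the `[callback, arguments]` pairs that would be passed to `BatchBuilder::addOperation()`, so it runs without Drupal.

```php
<?php

/**
 * Split the links found in one sitemap into separate save operations, so each
 * batch request only writes a bounded number of entities.
 *
 * @param string[] $links
 * @return array<int, array{0: string, 1: array}> [callback, arguments] pairs.
 */
function buildSaveOperations(array $links, string $sitemap, int $chunkSize = 50): array
{
    $operations = [];
    foreach (array_chunk($links, $chunkSize) as $chunk) {
        $operations[] = [
            // Hypothetical callback that would create and save Link entities.
            '\Drupal\link_audit\Batch\BatchLinkImport::saveLinksBatch',
            [$chunk, $sitemap],
        ];
    }
    return $operations;
}

$links = array_map(fn (int $i): string => "https://example.com/page-$i", range(1, 120));
$operations = buildSaveOperations($links, 'https://example.com/pages.xml');

// Inside the Drupal batch method the pairs would be fed to the BatchBuilder:
//   foreach ($operations as [$callback, $args]) {
//     $batch->addOperation($callback, $args);
//   }
//   batch_set($batch->toArray());
echo count($operations), " operations\n"; // 3 operations (50 + 50 + 20 links)
```

Keeping the entity writes in their own operations means a sitemap with thousands of links no longer has to be saved within a single batch request.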

Using this technique meant that the batch run could process the 40,000+ links without knowing beforehand how many links were available to process, and did so without timing out or running out of memory. The batch process just churned through the sitemap.xml files until it had run out of either sitemap.xml files or links to process.

Conclusion

In this article we looked at setting up an additional batch processing run whilst a batch process run was already underway. Whilst it isn't advisable to use this technique in every batch process, there are some use cases that make this a useful part of your batch toolkit.

Using this additional batch run technique is good if you have a recursive operation to perform. I have found that this normally involves API or web calls of some kind where the eventual outcome (other than there being no more items to process) isn't known at the start of the operation.

The important part to understand here is that any additional batch operations you create will be appended to the end of the current batch run. This means that you can't alter the current batch run once it is underway, with the caveat being that if you throw an exception your batch will stop entirely. Not being able to alter the batch operations makes sense if you think about the underlying data structure. The Drupal Batch API is built upon the Drupal Queue API, which means that once the queue is in place it isn't straightforward to fiddle with it (at least not without making direct database queries, which I don't advise doing).

All of the code for this module can be found in the batch addition example module on GitHub. You can also see all of the source code for all of the other modules in this series in the Drupal batch examples repository.

In the next article in this series we will take a look at how the Batch API is used within Drupal itself to perform potentially complex tasks.
